In this tutorial we explore the use of quantum neural networks for time series forecasting with PennyLane. We will build a variational circuit, a continuous-variable quantum neural network, that learns to fit a one-dimensional function. The model's prediction is the expectation value returned by the quantum node.

A quantum neural network (QNN) is a machine learning model that combines ideas from artificial neural networks and quantum information, with the aim of developing more efficient algorithms. Classical neural networks are currently challenging to train efficiently when the dimension of the data is large. A quantum neural network can be built by adding a quantum convolutional layer or by simulating each perceptron as a qubit. QNNs are mostly applied to tasks that use variational (parametrized) circuits, and they are trained with optimization algorithms. This style of programming is known as differentiable programming.

The computation is implemented using continuous functions whose gradients, or derivatives, can be calculated. The gradients indicate the direction and rate in which to change the parameters of the variational circuit so that its output moves toward the expected value.
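To make the role of the gradient concrete, here is a minimal sketch of gradient descent on a single parameter. The function `model` is a hypothetical stand-in for a circuit's expectation value, and the target value, learning rate, and finite-difference gradient are assumptions chosen only for illustration. PennyLane computes gradients of quantum nodes with its own methods.

```python
import numpy as np


def model(theta):
    # Hypothetical stand-in for the expectation value of a one-parameter circuit
    return np.cos(theta)


def cost(theta, target=0.5):
    # Squared difference between the model output and the desired output
    return (model(theta) - target) ** 2


def numerical_gradient(f, theta, eps=1e-6):
    # Central finite difference approximation of df/dtheta
    return (f(theta + eps) - f(theta - eps)) / (2 * eps)


theta = 0.1          # initial parameter value (assumed)
learning_rate = 0.5  # step size (assumed)

for _ in range(200):
    # Move the parameter against the gradient to reduce the cost
    theta -= learning_rate * numerical_gradient(cost, theta)

print(theta, model(theta))
```

Each step moves `theta` in the direction that lowers the cost, so the output of `model` is pulled toward the target.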

We will import the time series dataset. It should be placed in the same folder as this code. The dataset will be attached on GitHub.
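As a rough sketch of the loading step, the snippet below reads two whitespace-separated columns with `np.loadtxt`. The file layout (inputs in the first column, targets in the second) is an assumption, and the three rows in `sample` are placeholder values rather than the real dataset. To load the actual file, pass its path to `np.loadtxt` instead of the in-memory buffer.

```python
import io

import numpy as np

# Placeholder rows standing in for the real file (assumed two-column layout)
sample = io.StringIO("0.0 0.00\n0.5 0.48\n1.0 0.84\n")

data = np.loadtxt(sample)   # 2-D array with one row per data point
X = data[:, 0]              # inputs (first column, assumed)
Y = data[:, 1]              # targets (second column, assumed)

print(X.shape, Y.shape)
```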

Define the device on which to run the program. We will use strawberryfields.fock with a single wire. Because this is a continuous-variable device, the wire represents one qumode rather than a qubit.

We will define the quantum layer using the algorithm presented in this paper.

We will encode the input x into a quantum state and then execute the layers, each of which acts as a subcircuit of the neural network.
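As a rough illustration of the encoding and layer steps, the following NumPy sketch simulates a single mode in a truncated Fock basis. It is not the PennyLane code of this tutorial. The cutoff of 15, the gate order inside `layer` (rotation, squeezing, rotation, displacement, Kerr), the helper names, and the ħ = 2 convention for the position quadrature are all assumptions made for illustration.

```python
import numpy as np

CUTOFF = 15  # assumed Fock-space truncation

# Annihilation operator and photon numbers in the truncated Fock basis
a = np.diag(np.sqrt(np.arange(1, CUTOFF)), k=1)
n = np.arange(CUTOFF)


def exp_antihermitian(K):
    """exp(K) for anti-Hermitian K, using the eigendecomposition of H = iK."""
    w, V = np.linalg.eigh(1j * K)
    return (V * np.exp(-1j * w)) @ V.conj().T


def displacement(alpha):
    return exp_antihermitian(alpha * a.T - np.conj(alpha) * a)


def squeezing(r):
    return exp_antihermitian(0.5 * r * (a @ a - a.T @ a.T))


def rotation(phi):
    return np.diag(np.exp(1j * phi * n))


def kerr(kappa):
    return np.diag(np.exp(1j * kappa * n**2))


def layer(state, v):
    """Apply one assumed layer with five parameters v[0..4]."""
    gates = (rotation(v[0]), squeezing(v[1]), rotation(v[2]),
             displacement(v[3]), kerr(v[4]))
    for gate in gates:
        state = gate @ state
    return state


def quantum_neural_net(weights, x):
    state = np.zeros(CUTOFF, dtype=complex)
    state[0] = 1.0                   # start in the vacuum state
    state = displacement(x) @ state  # encode the input x as a displacement
    for v in weights:                # run each layer as a subcircuit
        state = layer(state, v)
    x_quad = a + a.T                 # position quadrature with hbar = 2
    return float(np.real(state.conj() @ x_quad @ state))


print(quantum_neural_net(np.zeros((1, 5)), 0.3))
```

With all weights set to zero the layers act as the identity, so under these assumptions the output should be close to 2x, the position expectation of a coherent state displaced by x.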

The objective is the square loss between the target labels and the model's predictions. The cost function evaluates this loss over the given data, where each prediction is the expectation value of the quantum node.
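A minimal sketch of the loss and cost described above, written in plain NumPy. The function names and the choice to average over the data points are assumptions. The argument `model` stands for any callable that maps weights and an input to a prediction, such as the quantum node.

```python
import numpy as np


def square_loss(labels, predictions):
    # Mean of the squared differences between targets and predictions
    labels = np.asarray(labels, dtype=float)
    predictions = np.asarray(predictions, dtype=float)
    return np.mean((labels - predictions) ** 2)


def cost(weights, features, labels, model):
    # Evaluate the model on every input, then compare with the targets
    predictions = [model(weights, x) for x in features]
    return square_loss(labels, predictions)


# Usage with a hypothetical linear model standing in for the quantum node
linear_model = lambda w, x: w * x
print(cost(2.0, [0.0, 1.0], [0.0, 1.0], linear_model))
```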

The weights of the network are initialized with values generated by the np.random.randn function, which draws samples from a standard normal distribution.
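The initialization might look like the sketch below. The number of layers (4), the five parameters per layer, the 0.05 scaling factor, and the fixed seed are assumptions for illustration and are not stated in the text.

```python
import numpy as np

np.random.seed(0)          # fixed seed so the run is reproducible (assumed)

num_layers = 4             # assumed number of layers
params_per_layer = 5       # assumed number of gate parameters per layer

# Small random values drawn from a normal distribution
weights = 0.05 * np.random.randn(num_layers, params_per_layer)

print(weights.shape)
```

Scaling the samples down keeps the initial gate parameters small, so the circuit starts close to the identity.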

Using the Adam optimizer, we update the weights for 500 steps. More steps generally lead to a better fit.
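For readers who want to see what one Adam update does, here is a plain NumPy sketch of the update rule applied to a toy problem. The hyperparameters (step size 0.01, beta1 = 0.9, beta2 = 0.999) and the toy linear model are assumptions. In the tutorial itself the update is handled by PennyLane's optimizer.

```python
import numpy as np


def adam_step(params, grad, m, v, t, lr=0.01, beta1=0.9, beta2=0.999, eps=1e-8):
    # Exponential moving averages of the gradient and its square
    m = beta1 * m + (1 - beta1) * grad
    v = beta2 * v + (1 - beta2) * grad**2
    # Bias correction for the zero-initialized averages
    m_hat = m / (1 - beta1**t)
    v_hat = v / (1 - beta2**t)
    params = params - lr * m_hat / (np.sqrt(v_hat) + eps)
    return params, m, v


# Toy problem: fit the slope w of y = w * x, where the true slope is 0.5
x = np.linspace(-1, 1, 20)
y = 0.5 * x

w = np.array([0.0])
m = np.zeros_like(w)
v = np.zeros_like(w)

for t in range(1, 501):  # 500 steps, as in the text
    # Gradient of the mean squared error with respect to w
    grad = np.array([np.mean(2 * (w[0] * x - y) * x)])
    w, m, v = adam_step(w, grad, m, v, t)

print(w)
```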

We will collect the model's predictions for inputs in the range [-1, 1].

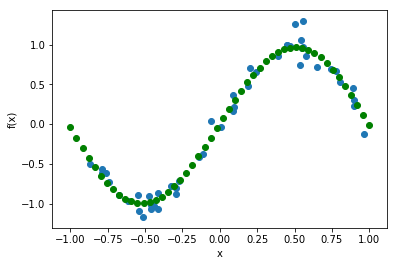

We will plot the shape of the function that the quantum neural network has “learned” alongside the original data. The green dots represent the model's predictions and the blue dots show the values of the actual function.

We can see that the quantum neural network was accurate in predicting f(x) for a given x. This was achieved by developing a variational circuit and adjusting its parameters, or weights, using the Adam optimizer.