Quantum computers have long been hailed as the future of complex calculations. Major companies, such as Volkswagen and IBM, have sought to harness quantum-computing-based algorithms for commercial applications.

A quick search for the future of quantum computing on an everyday search engine will return numerous articles, papers, and posts trumpeting the potential of quantum computers. Yet there is also an underlying tone of caution, as many go on to point out the current limitations of such systems.

Recent media coverage has added to the growing attention directed towards quantum computing, some of it positive, some of it not. In March 2021, the BBC reported that a Microsoft-based team had retracted a paper that was hailed in 2018 as “revolutionary” for quantum computing. The paper had presented evidence supporting an alternative method of creating qubits using Majorana particles (first proposed approximately nine decades ago by Ettore Majorana, an Italian physicist).

Unfortunately, several errors in the scientific process and presentation warranted a retraction of this claim, prompting Zulfi Alam, a vice president at Microsoft, to write in a LinkedIn post, “This is an excellent example of the scientific process at work.”

Of course, researchers have certainly not thrown the idea of quantum computers out the window. With the continued refinement and advancement of our knowledge regarding quantum computing, the question persists: how close are we, truly, to a quantum computer?

Do Real Quantum Computers Exist?

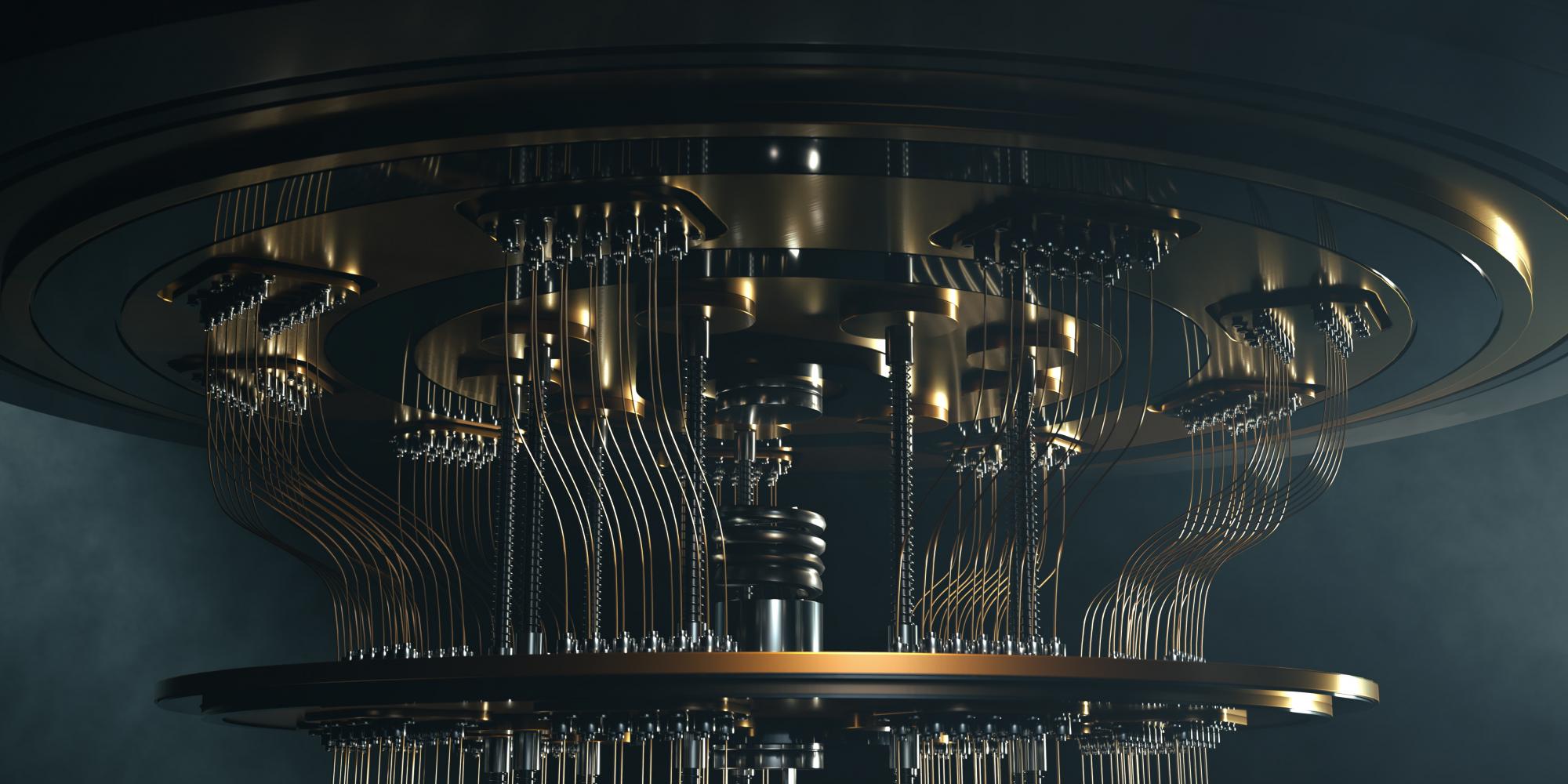

According to IBM, a quantum computer containing 275 qubits would be able to “represent more computational states than there are atoms in the known universe”. The first quantum computer was successfully operated in 1998. Today, several mainstream companies have invested billions of dollars in the development of quantum computers. A few such instances are included below.
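To make IBM's comparison a little more concrete, here is a minimal sketch of the arithmetic. It assumes the commonly quoted order-of-magnitude estimate of about 10^80 atoms in the observable universe; that figure and the function names are illustrative assumptions, not something taken from IBM.

```python
# Assumption: roughly 10**80 atoms in the observable universe. This is a
# commonly quoted order-of-magnitude estimate, not a figure from IBM.
ATOMS_IN_UNIVERSE_ESTIMATE = 10 ** 80


def basis_states(num_qubits: int) -> int:
    """Number of distinct basis states an n-qubit register spans: 2**n."""
    return 2 ** num_qubits


def min_qubits_exceeding(target: int) -> int:
    """Smallest n for which 2**n is strictly greater than target."""
    n = 0
    while basis_states(n) <= target:
        n += 1
    return n


if __name__ == "__main__":
    states = basis_states(275)
    print(f"2**275 has {len(str(states))} decimal digits")
    print("Exceeds the atom estimate:", states > ATOMS_IN_UNIVERSE_ESTIMATE)
    print("Qubits needed to pass the estimate:",
          min_qubits_exceeding(ATOMS_IN_UNIVERSE_ESTIMATE))
```

Python integers have arbitrary precision, so the comparison is exact. Under this particular estimate the crossover comes a little before 275 qubits; a larger atom count would push it higher.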

In September of 2020, IBM revealed that it had developed one of the largest existing quantum computers in the world (as of the time this blog post was published), consisting of 65 qubits. The company has already identified a number of areas in which the capabilities of quantum computing may be applied.

In 2018, Intel introduced “Tangle Lake”, a processor composed of 49 qubits. The processor’s name was not based on the (ahem, wonderful) film starring the long-haired princess. It was instead inspired by the processor’s use of entanglement and its environmental requirements. More specifically, like quantum computers developed by the likes of IBM and Google, it requires exceedingly low temperatures to permit the entanglement of the particles (as well as minimize decoherence), allowing for rather complex calculations to take place. Note here that “entanglement” is much what its name implies: the states of two particles become linked, such that measuring one immediately tells you about the state of its partner. (Despite the tempting picture of particles “communicating”, this link cannot be used to send information from one to the other.)
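As a rough illustration of the entanglement described above, the following sketch samples measurements of a textbook two-qubit Bell state. It is a toy classical simulation, not code for Tangle Lake or any real device, and the names `BELL_STATE`, `measure`, and `sample_pairs` are made up for this example.

```python
import random

# Amplitudes of the Bell state (|00> + |11>) / sqrt(2), keyed by the
# joint measurement outcome of the two qubits.
BELL_STATE = {"00": 2 ** -0.5, "01": 0.0, "10": 0.0, "11": 2 ** -0.5}


def measure(state, rng):
    """Sample one joint outcome using the Born rule: p = |amplitude|**2."""
    outcomes = list(state)
    weights = [abs(state[o]) ** 2 for o in outcomes]
    return rng.choices(outcomes, weights=weights, k=1)[0]


def sample_pairs(shots, seed=0):
    """Measure `shots` freshly prepared Bell pairs."""
    rng = random.Random(seed)
    return [measure(BELL_STATE, rng) for _ in range(shots)]


if __name__ == "__main__":
    results = sample_pairs(1000)
    for outcome in ("00", "01", "10", "11"):
        print(outcome, results.count(outcome))
```

Each qubit on its own looks like a fair coin flip, yet the two results always agree: only "00" and "11" ever appear. That correlation is what makes the pair entangled.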

Furthermore, Intel has also elected to simulate quantum computing using classical supercomputers in order to test code under development. The “Stampede” supercomputer at The University of Texas at Austin (completed in 2017) is used to do just that. The supercomputer itself is capable of simulating at most 42 qubits.
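A back-of-the-envelope sketch helps show why classical simulation tops out at a few dozen qubits. It assumes a straightforward full state-vector simulation that stores one 16-byte complex number per amplitude; Intel's actual simulator may use different precision or cleverer techniques, so treat the numbers as illustrative.

```python
# Assumption: one double-precision complex number (two 64-bit floats)
# is stored for every amplitude of the state vector.
BYTES_PER_AMPLITUDE = 16


def statevector_bytes(num_qubits: int) -> int:
    """Memory needed to hold all 2**n amplitudes of an n-qubit state."""
    return (2 ** num_qubits) * BYTES_PER_AMPLITUDE


def to_tebibytes(num_bytes: int) -> float:
    """Convert bytes to tebibytes (1 TiB = 2**40 bytes)."""
    return num_bytes / 2 ** 40


if __name__ == "__main__":
    for n in (30, 42, 49):
        print(f"{n} qubits: {to_tebibytes(statevector_bytes(n)):,.3f} TiB")
```

Every additional qubit doubles the memory requirement, which is why each extra simulated qubit is a significant jump rather than a small step.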

Challenges of Quantum Computing

Of course, designing a quantum computer in what are still the early years of this field does not come without its challenges. Quantum computers utilize qubits as their primary units, much as bits are considered the units of classical computers. Classical computer bits, however, are limited to two states, 1 and 0. Qubits, on the other hand, ideally operate on the subatomic scale, harnessing properties of quantum mechanics, such as spin, that allow them to exist in a combination of the 0 and 1 states at once, a phenomenon known as superposition.
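The difference between a bit and a qubit can be sketched in a few lines. Below, a qubit is modeled as a pair of complex amplitudes, and a Hadamard gate (a standard textbook gate, not something specific to any machine mentioned here) places it in an equal superposition. The helper names are hypothetical.

```python
import math

# A single-qubit state is a pair of amplitudes for |0> and |1>.
ZERO = (1 + 0j, 0 + 0j)


def apply_hadamard(state):
    """Apply the Hadamard gate, which maps |0> to (|0> + |1>) / sqrt(2)."""
    a, b = state
    s = 1 / math.sqrt(2)
    return (s * (a + b), s * (a - b))


def probabilities(state):
    """Probability of reading 0 or 1 when the qubit is measured."""
    return tuple(abs(amplitude) ** 2 for amplitude in state)


if __name__ == "__main__":
    superposed = apply_hadamard(ZERO)
    print("After one Hadamard: ", probabilities(superposed))
    print("After two Hadamards:", probabilities(apply_hadamard(superposed)))
```

After one gate the qubit reads 0 or 1 with equal probability; applying the same gate again returns it to a definite 0. A classical coin flip cannot be undone this way, which hints at why superposition is more than simple randomness.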

A large part of the challenge centers on being able to control the infamously fragile qubits. Seemingly insignificant environmental factors can throw the qubits for a loop (not literally, of course), leading to the loss of information. This decoherence can result from even slight interference from, for instance, a nearby electromagnetic field.

To minimize decoherence, qubits often must be kept at mere hundredths of a degree above absolute zero: approximately 20 millikelvins, or -273.13 degrees Celsius. Absolute zero is at approximately -273.15 degrees Celsius. For reference, deep space is approximately 2.7 kelvins, or -270.45 degrees Celsius.
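The temperatures quoted above can be checked with a short conversion sketch. The constant and function names are illustrative; the only inputs are the figures from the paragraph.

```python
ABSOLUTE_ZERO_CELSIUS = -273.15  # 0 kelvins expressed in degrees Celsius


def kelvin_to_celsius(kelvins: float) -> float:
    """Convert a temperature from kelvins to degrees Celsius."""
    return kelvins + ABSOLUTE_ZERO_CELSIUS


QUBIT_TEMPERATURE_K = 0.020   # approximately 20 millikelvins
DEEP_SPACE_TEMPERATURE_K = 2.7

if __name__ == "__main__":
    print(f"Qubits:     {kelvin_to_celsius(QUBIT_TEMPERATURE_K):.2f} C")
    print(f"Deep space: {kelvin_to_celsius(DEEP_SPACE_TEMPERATURE_K):.2f} C")
    ratio = DEEP_SPACE_TEMPERATURE_K / QUBIT_TEMPERATURE_K
    print(f"Deep space is about {ratio:.0f} times warmer on the kelvin scale")
```

On the Celsius scale the two temperatures look nearly identical, but measured from absolute zero the gap is more than a factor of one hundred.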

The requirement for rather extreme conditions to maintain stability, even if for only a few seconds, has made building a quantum computer notoriously difficult. In 1998, nearly two decades after Richard Feynman and others first hypothesized a possible quantum computer, the world’s first quantum computer was created by researchers from the Los Alamos National Laboratory, MIT, and UC Berkeley. However, this computer had a capacity of only two qubits. Even with more recent developments and methods, the fragility of the system has greatly limited the length of time these quantum computers have been able to remain in operation before the results become unusable “noise”.

From a theoretical perspective, there exists the question of whether quantum computers do indeed have an absolute leg-up over classical computers. The quest to prove quantum supremacy has been a partial source of motivation to continue in pursuit of creating a quantum computer.

Google (Alphabet) had, early on, taken a dive into the area by constructing a 53-qubit quantum computer. Both fortunately and unfortunately, the initial results exemplified the large role decoherence played in affecting the calculations made by their system. As Greg Kuperberg of UC Davis explained to Science magazine (AAAS), “their demonstration is 99% noise and only 1% signal.” The patterns had to be carefully discerned from the information the quantum computer returned. Nevertheless, the Sycamore processor, as the 53-qubit computer by Google came to be called, took a mere 200 seconds to solve a problem that had initially been projected to take significantly longer on classical supercomputers. (More recent methods of computation proposed in March 2021, however, have been able to simulate the processor on a classical computer.)

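Despite the train of apparent issues encountered, researchers continued to persevere, applying the principles of error correction. The idea of computing reliably with unreliable components was pioneered by mathematician and quantum physicist John von Neumann; error-correcting codes designed specifically for qubits followed in the mid-1990s, beginning with the work of Peter Shor.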
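The core idea behind error correction, trading several unreliable units for one more reliable one, can be sketched with a classical three-bit repetition code. This is a deliberately simplified stand-in: real quantum error correction cannot simply copy qubits and relies on more involved schemes, so the code below illustrates the principle rather than what any quantum computer actually runs. All names are hypothetical.

```python
import random


def encode(bit):
    """Store one logical bit as three physical copies."""
    return [bit] * 3


def apply_noise(codeword, flip_prob, rng):
    """Flip each physical bit independently with probability flip_prob."""
    return [b ^ 1 if rng.random() < flip_prob else b for b in codeword]


def decode(codeword):
    """Recover the logical bit by majority vote."""
    return 1 if sum(codeword) >= 2 else 0


def logical_error_rate(flip_prob, trials, seed=0):
    """Fraction of trials in which the decoded bit is wrong."""
    rng = random.Random(seed)
    errors = sum(
        decode(apply_noise(encode(0), flip_prob, rng)) != 0
        for _ in range(trials)
    )
    return errors / trials


if __name__ == "__main__":
    p = 0.1
    print("Physical error rate:", p)
    print("Logical error rate: ", logical_error_rate(p, 20_000))
```

A single flipped bit is outvoted by the other two, so the logical bit fails only when at least two of the three copies flip. For small error rates that happens far less often than a single flip.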

It is worth noting, however, that the area is still in need of technologies that can take full advantage of a quantum computer, namely for sharing and storing the information. While exploration into error correction and the minimization of decoherence continues, several major technology companies have made advances in the construction of quantum computers.

Industrial Applications: Commercialization of Quantum Computing

The sheer levels of complexity at which quantum computers are capable of operating are astounding and certainly push the boundaries of human comprehension. This promised complexity has proved enticing for various industries, not just current technology magnates.

As technology continues to advance, an argument has been made that scientists may be able to develop systems that can both recognize and mimic human systems. Consider, for example, exceedingly versatile voice recognition systems capable of processing information at vast speeds and with the accuracy of a mother recognizing her child’s voice. As can be noted, the human factor introduces a rather dynamic variable (full of “what-ifs”) into the situation, and classical computers will often have difficulty adjusting accordingly. Thus, quantum computers have proven to be promising in the fields of artificial intelligence and machine learning.

A second, rather promising field lies in computational chemistry, which has been notoriously difficult to model. As with other areas, the hope has been to one day utilize quantum computers to compute and map out molecules accurately in a reasonably efficient amount of time -- as opposed to, say, the thousands of years such problems are projected to take on classical computers.

In August 2020, Google AI Quantum released a research article in the AAAS journal Science explaining its findings in utilizing a smaller, 12-qubit version of its Sycamore quantum processor to work through a series of computational chemistry problems. The researchers ran a simulation of the Hartree-Fock procedure, a standard approximation method in computational chemistry.

They were able to model the energy state of a molecule made up of 12 hydrogen atoms (12 for the 12 electrons, one per hydrogen atom, and, accordingly, 12 corresponding qubits). From there, the Google AI Quantum team managed to simulate a reaction involving both hydrogen and nitrogen atoms.

At the surface level, the prediction of how a certain reaction will progress seems elementary. Years of research have established rules that govern these reactions. However, the how of these reactions is considerably more difficult to discern (and becomes rather instrumental in, for instance, pharmaceutics). The model constructed by the researchers using the quantum computers allowed for the determination of measurable results, such as time of reaction, depending on factors such as temperature or molecule concentration.

Granted, some have suggested taking these developments with a grain of salt. For one, these computational chemistry problems and the Hartree-Fock procedure have been simulated on classical computers. Furthermore, the 12-qubit quantum computer relied on a classical computer to sift through the results and refine each of the subsequent run-throughs of the simulation (machine learning).
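The back-and-forth between the quantum processor and the classical computer described above can be sketched as a simple loop: a "quantum" step estimates an energy for some setting, and a classical step uses that result to pick the next setting. The toy below is not Google's Hartree-Fock experiment. It assumes a single qubit, a made-up one-parameter circuit, and a stand-in energy function, and it simulates the quantum step exactly on a classical machine.

```python
import math


def prepare_state(theta):
    """Amplitudes of a qubit rotated from |0> by angle theta (an Ry rotation)."""
    return (math.cos(theta / 2), math.sin(theta / 2))


def energy(theta):
    """Toy 'quantum' step: expectation of Z, i.e. P(0) - P(1)."""
    a0, a1 = prepare_state(theta)
    return a0 ** 2 - a1 ** 2


def parameter_shift_gradient(theta):
    """Gradient of the energy from two extra evaluations at shifted angles."""
    shift = math.pi / 2
    return 0.5 * (energy(theta + shift) - energy(theta - shift))


def optimize(theta=0.3, learning_rate=0.4, steps=200):
    """Classical step: plain gradient descent on the circuit parameter."""
    for _ in range(steps):
        theta -= learning_rate * parameter_shift_gradient(theta)
    return theta, energy(theta)


if __name__ == "__main__":
    best_theta, best_energy = optimize()
    print(f"theta = {best_theta:.4f}, energy = {best_energy:.6f}")
```

On real hardware, `energy` would be estimated from many noisy measurements rather than computed exactly, which is part of why the classical side of the loop carries so much of the work.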

The question of quantum supremacy has become increasingly pressing. Even more recently, on March 4, 2021, Feng Pan and Pan Zhang of the Chinese Academy of Sciences and University of Chinese Academy of Sciences in Beijing, China, published a paper that seemed to throw the progress out the window. The paper described an alternate method of processing that was able to simulate the 53-qubit Sycamore processor rather effectively on a classical computer, thus allowing a classical machine to perform many of the same calculations the processor was experimentally shown to perform.

Nevertheless, not all hope was lost. Ryan Babbush, a researcher on the Google project, says of the quantum-chemistry experiment, “It shows that, in fact, this device is a completely programmable digital quantum computer that can be used for really any task you might attempt” (as said to Scientific American). The ability to apply quantum computers to a wide range of applications means the fields of cybersecurity, financial modelling, and optimization, among others, are gradually integrating quantum computing technologies into their operations.

Many have been and are a part of the trek upwards in hopes of making a superior form of computation a reality, and while no path is without trials, researchers, companies, and others continue to strive towards that goal. While quantum computing certainly has not achieved the classical computer's status of widespread use and ownership, much less covered all the technologies needed to fully maximize its capabilities, it has the potential to become a powerful tool in industries worldwide.