The most compelling aspect of quantum computing is its potential to solve certain problems that classical computers cannot solve in practice. Although we are still in the noisy intermediate-scale quantum (NISQ) era, in which devices are dominated by noise, Google Quantum AI and its collaborators have reported successfully benchmarking their Sycamore processor, which many acclaim as the first experimental realization of quantum supremacy.

"I [therefore] believe it's true that with a suitable class of quantum machines you could imitate any quantum system, including the physical world." ~ Richard Feynman

This statement reflects the prime motivation behind building quantum devices. The idea of merging information theory and quantum mechanics dates back to the early 1980s, but it initially attracted little attention. Interest grew when Richard Feynman, in a lecture published in 1982, showed the limitations of classical machines in simulating real-world quantum phenomena. The excitement eventually faded, only to rise again in 1994, when Peter Shor proposed an algorithm that could, in theory and on an ideal quantum machine, efficiently factor large integers into their prime factors, threatening widely used public-key encryption such as RSA. The key takeaway is that, for certain problems, quantum computers could compute what classical computers cannot in practice: even where a classical computer could in principle find the answer, it would not do so within a realistic time-frame.

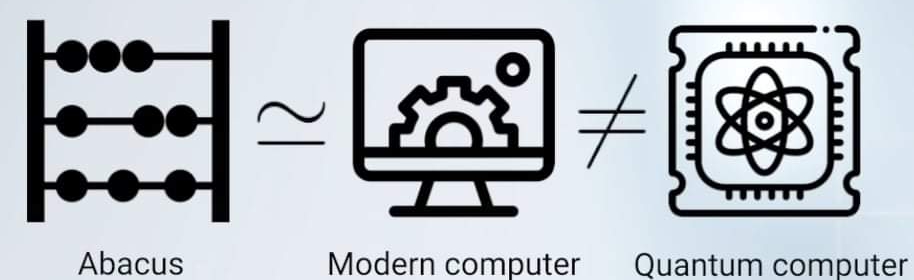

The demonstration of quantum supremacy challenges the extended (or "strong") Church-Turing thesis, according to which any reasonable model of computation can be efficiently simulated by a classical (probabilistic) Turing machine. If quantum machines can efficiently solve problems that lie beyond the classical realm, this claim no longer holds.

Solving any classically intractable "useful" problem, however, first requires a quantum device that can solve some intractable problem at all, useful or not. With this aim, Google Quantum AI tackled a specific problem, tailored to demonstrate quantum supremacy, that lies right on the boundary between what is and what is not classically simulatable. At the heart of this research is a new processor called 'Sycamore'.

Sycamore processor: What makes it different?

The Sycamore processor is based on superconducting transmon qubits. In conventional chips, which are two-dimensional, the control and readout wiring takes up more than half of the area. Sycamore instead uses a re-engineered design: the qubit array is still a 2-D chip, but it is stacked "on" a separate control chip that carries all the control and readout wiring. This design, which makes Sycamore far more scalable than its counterparts, is called a "flip-chip" design.

The chip is then embedded in a circuit board made of superconducting metal and cooled in a dilution refrigerator to below 10 millikelvin.

Another instance of brilliant engineering is Sycamore's qubit connectivity. Its "tunable coupling architecture" makes connections configurable by switching the couplers, themselves transmons, "on" and "off". In a rectangular array of 54 qubits, 88 couplers link each qubit to its nearest neighbors, so that neighboring qubits can be made to interact or to stay independent at will.
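To make the "on/off" idea concrete, here is a minimal sketch under strong simplifying assumptions: two neighboring qubits share a single excitation, the coupler is represented only by an effective exchange coupling g (with ħ = 1), and g = 0 stands for the coupler switched off. The coupling value and the function name `swap_populations` are hypothetical; real transmon couplers involve more energy levels and frequency tuning.

```python
import math


def swap_populations(g, t):
    """Return (P(|01>), P(|10>)) after evolving |01> for time t.

    Idealized model (an assumption): exchange Hamiltonian
    H = g(|01><10| + |10><01|), hbar = 1, single excitation only.
    Setting g = 0 models the coupler switched "off".
    """
    # Exact solution in the single-excitation subspace:
    # |psi(t)> = cos(g t)|01> - i sin(g t)|10>
    p01 = math.cos(g * t) ** 2
    p10 = math.sin(g * t) ** 2
    return p01, p10


g_on = 2 * math.pi * 0.020      # hypothetical coupling: 20 MHz, in rad/ns
t_swap = math.pi / (2 * g_on)   # duration of a full swap (about 12.5 ns here)

print("coupler on :", swap_populations(g_on, t_swap))  # excitation moves to the neighbor
print("coupler off:", swap_populations(0.0, t_swap))   # both qubits left untouched
```

With the coupler on for `t_swap`, the excitation hops completely to the neighboring qubit; with g = 0 nothing happens, which is the "independent" setting.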

Calibration and Benchmarking

Calibration is the process of finding the control settings with which the hardware faithfully executes quantum logic, so that we can run an arbitrary quantum algorithm. To make this possible, we need to find the best working parameters for the qubit drive and the readout. With around 100 parameters to tune for each qubit, calibration becomes increasingly challenging as the number of qubits and gates grows. Two crucial steps are 1) measuring qubit decoherence time versus frequency and 2) choosing an optimal operating frequency for each qubit. Then, for each individual qubit, the drive amplitude of a "pi-pulse", which flips the qubit from the 0 state to the 1 state, must be found through a Rabi experiment. Another important set of parameters to tune is the coupler settings that produce resonant swapping between neighboring qubits.
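A Rabi experiment can be sketched as follows, assuming an ideal two-level qubit whose rotation angle is proportional to the drive amplitude, with no decoherence or readout error. The Rabi rate, the amplitude sweep and the helper names are hypothetical; on real hardware one would typically fit a sinusoid to the noisy data rather than take the raw maximum.

```python
import math
import random


def excited_probability(amplitude, rabi_rate):
    """Ideal two-level model (an assumption): rotation angle = rabi_rate * amplitude,
    so P(|1>) = sin^2(angle / 2). No decoherence, no readout error."""
    angle = rabi_rate * amplitude
    return math.sin(angle / 2) ** 2


def simulate_rabi_sweep(amplitudes, rabi_rate, shots, rng):
    """Estimate P(|1>) at each drive amplitude from a finite number of single-shot readouts."""
    estimates = []
    for amp in amplitudes:
        p1 = excited_probability(amp, rabi_rate)
        ones = sum(1 for _ in range(shots) if rng.random() < p1)
        estimates.append(ones / shots)
    return estimates


def pi_pulse_amplitude(amplitudes, populations):
    """Amplitude with the highest measured P(|1>).
    Only valid if the sweep covers less than one full Rabi period (a single peak)."""
    best = max(range(len(amplitudes)), key=lambda i: populations[i])
    return amplitudes[best]


rng = random.Random(0)
rabi_rate = 2 * math.pi / 0.8                # hypothetical: one full Rabi period per 0.8 amplitude units
amplitudes = [i * 0.01 for i in range(71)]   # sweep 0.00 .. 0.70, less than one period
populations = simulate_rabi_sweep(amplitudes, rabi_rate, shots=2000, rng=rng)
estimate = pi_pulse_amplitude(amplitudes, populations)
print(f"estimated pi-pulse amplitude: {estimate:.2f} (model value: 0.40)")
```

The pi-pulse sits where the rotation angle reaches π, i.e. at half a Rabi period; the sweep is deliberately kept shorter than one period so the maximum is unambiguous.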

In the quantum supremacy experiment, the researchers used cross-entropy benchmarking. Benchmarking, in simple terms, is a way to measure how well the calibration works. For this, they generated a set of random sequences (quantum circuits) of single-qubit gates interleaved with two-qubit gates (iSWAP-like gates). Each sequence was run on the quantum processor a few thousand times to obtain experimental probabilities for its outputs. The same sequences were then simulated on a classical computer to obtain the ideal, expected probabilities. Comparing these two probability distributions gives an estimate of the fidelity of the quantum gates, a measure of "how well" the gates perform. Finally, the parameters of the gate unitaries were optimized in a feedback loop to maximize this fidelity, which helped the team identify and correct possible sources of error.
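The comparison step can be illustrated with the linear cross-entropy benchmarking (XEB) estimator used in the Sycamore paper, F = 2^n ⟨P(x_i)⟩ − 1, where P(x_i) is the ideal probability of each sampled bit-string x_i. The sketch below is a toy version under stated assumptions: a 5-qubit state-vector simulation with random single-qubit rotations and CZ gates (not Sycamore's actual gate set), and a "noisy device" modeled as returning the ideal output with probability f and a uniformly random bit-string otherwise. All names and parameter values are illustrative.

```python
import cmath
import math
import random


def apply_1q(state, q, u):
    """Apply the 2x2 unitary u to qubit q (bit q of the basis-state index), in place."""
    step = 1 << q
    for i in range(len(state)):
        if not (i & step):
            a, b = state[i], state[i | step]
            state[i] = u[0][0] * a + u[0][1] * b
            state[i | step] = u[1][0] * a + u[1][1] * b


def apply_cz(state, q1, q2):
    """Controlled-Z: flip the sign of every amplitude where both qubits are |1>."""
    mask = (1 << q1) | (1 << q2)
    for i in range(len(state)):
        if (i & mask) == mask:
            state[i] = -state[i]


def random_1q_unitary(rng):
    """Rz(a) Ry(b) Rz(c) with random angles (a simplification, not Sycamore's gate set)."""
    a, b, c = (rng.uniform(0, 2 * math.pi) for _ in range(3))
    cb, sb = math.cos(b / 2), math.sin(b / 2)
    return [[cb * cmath.exp(-0.5j * (a + c)), -sb * cmath.exp(-0.5j * (a - c))],
            [sb * cmath.exp(0.5j * (a - c)), cb * cmath.exp(0.5j * (a + c))]]


def ideal_probabilities(n, depth, rng):
    """Noise-free output distribution of a random brickwork circuit on an n-qubit chain."""
    state = [0j] * (1 << n)
    state[0] = 1 + 0j                          # start in |00...0>
    for layer in range(depth):
        for q in range(n):
            apply_1q(state, q, random_1q_unitary(rng))
        for q in range(layer % 2, n - 1, 2):   # alternate CZ pairs between layers
            apply_cz(state, q, q + 1)
    return [abs(amp) ** 2 for amp in state]


def linear_xeb(probs, samples, n):
    """Linear XEB estimate: F = 2^n * mean of P_ideal(x_i) over the sampled bit-strings - 1."""
    return (2 ** n) * sum(probs[x] for x in samples) / len(samples) - 1


n, depth, shots, f = 5, 10, 20000, 0.3        # f: hypothetical fidelity of the "noisy device"
rng = random.Random(1)
probs = ideal_probabilities(n, depth, rng)

ideal_samples = rng.choices(range(2 ** n), weights=probs, k=shots)   # perfect device
uniform_samples = [rng.randrange(2 ** n) for _ in range(shots)]      # fully depolarized device
noisy_samples = [x if rng.random() < f else rng.randrange(2 ** n)    # ideal w.p. f, else random
                 for x in ideal_samples]

f_ideal = linear_xeb(probs, ideal_samples, n)
f_uniform = linear_xeb(probs, uniform_samples, n)
f_noisy = linear_xeb(probs, noisy_samples, n)
print(f"ideal: {f_ideal:.3f}  uniform: {f_uniform:.3f}  noisy: {f_noisy:.3f}")
```

Ideal samples give F well above 0 (close to 1 for a well-scrambled circuit), uniformly random samples give F near 0, and the partially noisy samples land near f times the ideal value, which is why F can be read as an estimate of circuit fidelity.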

Cross-entropy benchmarking, however, quickly becomes classically intractable when all 53 working qubits (one of the 54 was not functional) are sampled simultaneously, because the number of amplitudes grows to 2^53 ≈ 9 × 10^15, roughly 10^16. So instead of computing and comparing all of those probabilities, they sampled the distribution: they collected output bit-strings from the processor and inferred the fidelity of the whole circuit from the ideal probabilities of just those bit-strings. In effect, this estimate measures how often the processor produces "high-probability" bit-strings.
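A quick back-of-the-envelope check of that number, assuming (for illustration only) that each amplitude of a full state vector is stored as a single-precision complex number of 8 bytes:

```python
n_qubits = 53
amplitudes = 2 ** n_qubits
print(f"{amplitudes:,} amplitudes (about {amplitudes:.1e})")  # 9,007,199,254,740,992 amplitudes (about 9.0e+15)

# Assumption for illustration: single-precision complex amplitudes, 8 bytes each.
bytes_per_amplitude = 8
total_bytes = amplitudes * bytes_per_amplitude
print(f"full state vector: about {total_bytes / 1e15:.0f} petabytes")  # about 72 petabytes
```

Doubling the precision to 16 bytes per amplitude would double the storage again, so a brute-force comparison over every amplitude is out of reach.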

Computational task

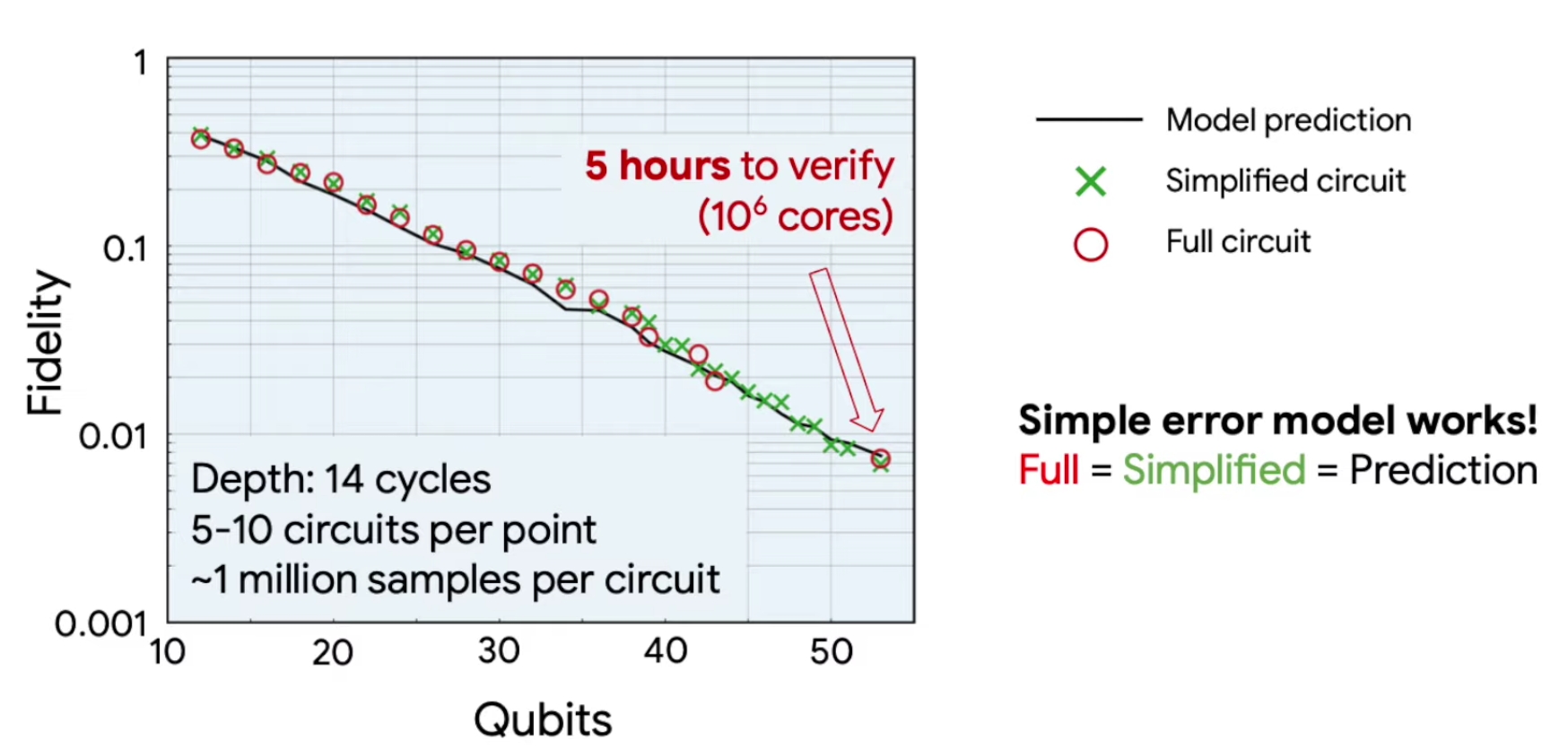

With the setup described above in place, the researchers were left with the final task: competing against a supercomputer. For this experiment, they chose to sample the output of a pseudo-random quantum circuit large enough that the cost of computing it classically becomes prohibitive. The main difficulty at this stage was fidelity estimation: on the one hand they were trying to exceed classical computation, on the other, they needed classical simulation to confirm the result. To overcome this challenge, they came up with a way of controlling circuit difficulty: 1) changing the size of the circuit, 2) removing some gates to reduce entanglement, and 3) changing the order in which the gates are executed so that the circuit is easier to simulate. The experiment showed that, at a circuit depth of 14 cycles, the fidelities of the full circuits, of the simplified circuits and of the predicted error model were consistent with one another. Even so, the simplified problems soon became demanding for classical computers: verifying the simplified circuit for 53 qubits took about 5 hours on a supercomputer.
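The "predicted" fidelity can be sketched with a simple digital error model, in which every gate and measurement fails independently, so that the circuit fidelity is the product of the individual success probabilities. The error rates and gate counts below are hypothetical placeholders, not the values measured on Sycamore.

```python
def predicted_fidelity(n_1q, e_1q, n_2q, e_2q, n_meas, e_meas):
    """Digital error model: each operation succeeds independently with probability 1 - e,
    so the whole-circuit fidelity is the product of all success probabilities."""
    return (1 - e_1q) ** n_1q * (1 - e_2q) ** n_2q * (1 - e_meas) ** n_meas


# Hypothetical placeholder numbers, not Sycamore's measured values.
n_qubits, cycles = 53, 14
n_1q = n_qubits * cycles      # assume one single-qubit gate per qubit per cycle
n_2q = 300                    # hypothetical total number of two-qubit gates
fidelity = predicted_fidelity(n_1q, 0.002, n_2q, 0.006, n_qubits, 0.04)
print(f"predicted circuit fidelity: {fidelity:.2%}")
```

Even small per-operation error rates compound quickly: with these placeholder numbers the predicted fidelity falls to well under 1%, which is why a measured fidelity that agrees with such a prediction is a meaningful consistency check.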

All of the data from the experiment are also freely available online.

For contrast, the researchers also benchmarked specific classical simulation algorithms on several supercomputers. For one of these, the Schrödinger-Feynman algorithm, they estimated that the Summit supercomputer at Oak Ridge National Laboratory would need about 10,000 years and 1 petabyte of memory for a task that the Sycamore processor completed in about 200 seconds.

Debates surrounding the claim

The research article by Google Quantum AI and its collaborators, published in Nature in October 2019, has, however, also stirred up criticism and even contention. IBM, for example, dissented, arguing that Google had not achieved supremacy because "Ideal simulation of the same task can be performed on a classical system in 2.5 days and with far greater fidelity."
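To put the two classical estimates side by side, here is the simple arithmetic comparing each with Sycamore's roughly 200-second run time. The figures are the ones quoted in this article; the variable names are illustrative, and the comparison deliberately ignores the fidelity differences that were part of IBM's argument.

```python
SECONDS_PER_DAY = 24 * 3600
SECONDS_PER_YEAR = 365.25 * SECONDS_PER_DAY

sycamore_seconds = 200                          # Sycamore run time quoted above
google_estimate = 10_000 * SECONDS_PER_YEAR     # Google's Summit estimate, in seconds
ibm_estimate = 2.5 * SECONDS_PER_DAY            # IBM's counter-estimate, in seconds

print(f"Google's estimate: {google_estimate / sycamore_seconds:.1e} times Sycamore's run time")  # 1.6e+09
print(f"IBM's estimate:    {ibm_estimate / sycamore_seconds:.0f} times Sycamore's run time")     # 1080
```

The two estimates differ by roughly six orders of magnitude, which shows how much the supremacy claim depends on which classical method is assumed.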

In this context, John Preskill writes: "In the 2012 paper that introduced the term 'quantum supremacy,' I wondered: 'Is controlling large-scale quantum systems merely really, really hard, or is it ridiculously hard? In the former case we might succeed in building large-scale quantum computers after a few decades of very hard work. In the latter case we might not succeed for centuries, if ever.' The recent achievement by the Google team bolsters our confidence that quantum computing is merely really, really hard. If that's true, a plethora of quantum technologies are likely to blossom in the decades ahead."

Moreover, supremacy or not, the brilliantly designed Sycamore processor provides a much-needed leg-up for near-term quantum computers, because its benchmarking techniques open new ways to use quantum machines under noisy conditions. Many researchers are optimistic that this milestone will guide attempts to solve practically oriented problems with near-term devices.